Find the answers to some of the most common questions we receive at Gatehouse Satcom.

What sets 5G New Radio apart from previous generations of wireless technology?

When should we expect the NTN MBB services to be commercially available in scale?

What’s the advantage of regenerative architecture?

With Ku-band support in Rel. 19, can we assume higher throughput for eMBB compared to 70/2 Mbps?

What is the need for new functional splits in regenerative architecture in R19? Would a LEO regenerative satellite be able to implement a payload with a full DU, considering it may need to serve many beams?

What are the bands for the extension in the S- and L-bands?

Do the 3GPP standards support UEs without GNSS?

Can the NTN L-band bandwidth be reduced from 5MHz to e.g. 1MHz?

Will there be a convergence between terrestrial mobile operator and satellite for mobile broadband?

How is measurement with an orbital testbed progressing?

How many HARQ processes did you enable for the PTT settings?

What is the number of PTT users that can work at the same time?

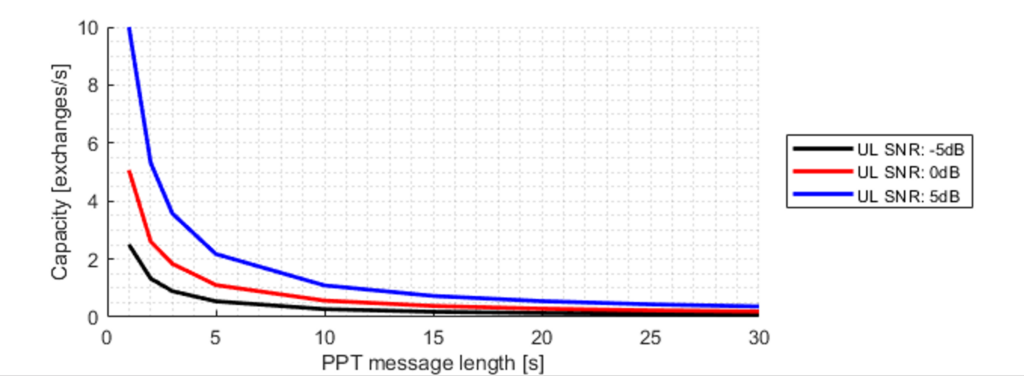

Here, we can see that in poor link conditions (DL -11 dB, UL -5 dB) we can achieve 0.4 exchanges/s of a 5 s voice message.

If we consider that messaging takes place in pairs, this means that if it takes an aggregated 10 seconds to record and listen to a message, then a minimum of 4 such pairs can be active at once at any time.

Utilizing broadcasting functionality (SC-PTM), one can obtain much higher scalability on the receiving end. Then more than 4 groups of UEs can take part in a PTT session (assuming some token scheme for the “speaker”).
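The pair estimate above is a rate-times-duration product (Little's law). A minimal sketch of that arithmetic follows; the function and parameter names are illustrative and not part of any Gatehouse Satcom tool.

```python
def concurrent_pairs(exchanges_per_s: float, seconds_per_exchange: float) -> float:
    """Average number of exchanges in progress: supported rate x time each one occupies."""
    return exchanges_per_s * seconds_per_exchange


# Figures quoted above: 0.4 exchanges/s, 10 s to record and listen to one message.
pairs = concurrent_pairs(0.4, 10.0)
print(f"{pairs:.1f} pairs can be active at once")
```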

Are the messaging services and the PTT services still supported for on-the-move operation when GNSS signals are jammed?

What is the number of satellites on the LEO constellation to obtain those delays?

Would all the standard compliant devices be able to access two-way messaging?

What are some benefits of using NB-IoT instead of a broadband solution?

Will 5G NR NTN replace 5G NB-IoT NTN in the future?

Will 5G New Radio NTN be based on standards?

What are the benefits of incorporating NTN into 5G New Radio?

How does Gatehouse Satcom contribute to the realization of 5G New Radio NTN?

What are the use cases for 5G New Radio from NTN (Non-terrestrial networks)?

How critical is the control of interferences in the satellite ground station between 5G spectrum and the satellite communications?

How much gain do you really need for a satellite?

Which functionalities of the 5G NTN gNB would be suitable to run directly on satellite?

When can I buy a neatly packaged 5G New Radio base station software stack from you?

What is the technical difference for a terminal device compliant with 3GPP Rel-17 between working with a LEO satellite and a GEO satellite in relation to 5G New Radio NTN? Is the difference only antenna performance?

Is 5G New Radio NTN more suitable for GEO or LEO satellites?

Is Release 17 all about NB-IoT and how can I make a business case based on the release?

In the transparent architecture, would phased arrays be used in the gateway on the feeder link or in the satellite?

Is multi-beam beamforming used to obtain better capacity and performance in the service link and feeder link?

Where is the 5G core located in the transparent system architecture?

How can GEO satellites support 5G backhaul?

Will directional antennas and increase of the link budget help increase the bandwidth?

How much GEO satellite resources (EIRP) were used to close the direct to handset links? How many customers could be hosted on a typical spot beam?

- Spot EIRP --> DL CNR

- 81.6 dBm --> -3.3 dB

- 76.1 dBm --> -8.5 dB

- 84.4 dBm --> -2.2 dB

What is the minimum transmit power required for an IoT device?

What is the smallest bandwidth for NB-IoT downlink?

What is the potential of the maritime use-case from a mobile network operator’s perspective?

What pre-release-17 features would be advantageous for 5G NTN in GEO?

- R14: Non-anchor RACH & Paging

- R15: Early Data Transmission

- R15: Wake Up Signal

- R15: SR HARQ disabling

- R16: Group WUS

- R17: 16QAM

What performance can be achieved with 5G NTN on GEO in terms of latency and throughput?

Which architecture is more applicable to legacy GEO – transparent or regenerative?

How do I find out if a GEO satellite already in-orbit would be capable for 5G NTN?

What is required of legacy GEO satellites in-orbit to support 5G NTN?

- A channel bandwidth of 200kHz is required for both the service and feeder link – although if the satellite has regenerative capabilities the spectrum requirement for the feeder link can be diminished.

- Note that multiple legacy system channels can potentially be combined to form the 200 kHz channel if they are contiguous and filter notches do not have a detrimental effect on performance.

- Check TR36.763 Sec 6.2.2 (case 1, 4 & 7) for indicative link budget results for GEO satellites. Indicative performance of an NTN NB-IoT system was included in the slides. The satellite should be able to deliver an appropriate spot EIRP.

- Note that power budget can be saved on legacy GEO satellites by utilizing a quasi-fixed earth cell topology. Here the cells are turned on and off in a scheduled manner, allowing UEs to turn off AS functions between coverage periods and allowing the GEO satellite to save power.

Are there standardized models for fading simulation made by 3GPP as well?

Yes, 3GPP has standardised CDL and TDL fading models for NTN based on the “IST winner II” model.

How will the beamforming be applied from the satellite?

In NTN IoT the goal is to reuse the hardware platforms of terrestrial cellular. So the UEs are essentially similar to handheld devices with an omnidirectional antenna. Beamforming may be applied from the satellite site to orient the beam towards a specific geolocation for the “earth-fixed cell” scenario.

What has been done to minimize signaling overhead?

NB-IoT is an LPWAN (low-power wide-area network) technology; such protocols are optimized for long-range transmission of small data packets. NB-IoT therefore already has little signaling overhead compared to other protocols (which is also why the feature set is minimized). Further, GH is implementing DoNAS in its waveform, and it is already included in the analysis.

How is your system coping with finding satellites when both devices and satellites are moving?

3GPP has defined functionality wrt. the channel raster such that UEs will always be able to look for, find and appropriately identify any available channel. The trick is to find an available cell by searching for that particular channel while in coverage of a serving satellite. This can be helped by satellite assistance information, which is a feature included in Rel-17.

Has Gatehouse Satcom done any study concerning potential interference between terrestrial component and NTN component within the same network?

We have not studied interference between TN and NTN. The networks should be separated in frequency, with appropriate guard bands to handle Doppler shift in the NGSO case. The bands and channels allocated for NTN and TN are being determined by standardization organisations like ITU, 3GPP and ETSI. As a general rule you can count on interference not being allowed.

What kinds of waveforms have you developed?

We have developed waveforms ranging from GMR-1 to DAMA protocols, to Inmarsat BGAN, to 5G NB-IoT – for military purposes as well as commercial services. If you’d like more details on a specific waveform, just let us know.

Why is NB-IoT based on 5G? Is there a technical limitation that prevented NB-IoT from working with 4G standards?

5G is a set of requirements for networks – as was 4G. In 5G one of the targeted use-cases is massive machine type communications (mMTC). The requirement for a 5G mMTC technology is that it must be able to service 1 million devices per km², each sending 32 bytes of L2 data every 2 hours. After the requirements had been set, the development of the new technologies for 5G started. It was quickly found that NB-IoT and eMTC were sufficient for this requirement (terrestrially) given enough channels. Therefore these radio access networks are 5G compliant and hence now called 5G. In the backbone of the network there is a core network; here the 5G variant is called 5GC (5G core) and the 4G variant is called EPC (evolved packet core). Even though the RAN remains largely the same (but has developed over the 3GPP releases), there are some differences in the base station depending on whether it is interfacing with 5GC or EPC.
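To give a feel for the scale of that mMTC requirement, the figures quoted above can be turned into an offered load per km². This is a rough sketch that counts the L2 payload only and ignores all signaling overhead; the constant names are illustrative.

```python
DEVICES_PER_KM2 = 1_000_000      # mMTC density requirement quoted above
PAYLOAD_BYTES = 32               # L2 payload per report
REPORT_INTERVAL_S = 2 * 3600     # one report every 2 hours

# Average number of reports arriving per second in one square kilometre.
reports_per_s = DEVICES_PER_KM2 / REPORT_INTERVAL_S

# Corresponding payload bit rate (payload only, no protocol overhead).
payload_bit_rate = reports_per_s * PAYLOAD_BYTES * 8

print(f"{reports_per_s:.1f} reports/s per km2")
print(f"{payload_bit_rate / 1000:.1f} kbit/s of payload per km2")
```

The payload rate itself is modest; what stresses the network is the number of separate access attempts per second.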

Can your feasibility study be used to determine the coverage and capacity of a satellite within a specific geographic area?

The feasibility study allows for ascertaining system-level KPIs: system capacity, UE QoS (throughput, latency) and UE energy consumption. This is done on the basis of the scenario definition, so it is indeed possible to define a specific geographic area, say the Himalayas, and ascertain the performance of a cell or a UE in that location.

What are the challenges of using transparent mode in NGSO systems?

We see two issues with the use of transparent mode in NGSO systems. 1) As the satellites are visible from both the ground station and the user terminals only for a relatively short period, service can only be obtained in short time intervals. 2) Since ground stations must be located in the same satellite footprint as the user terminals, there will be large parts of the earth's surface, like the oceans, where it is not possible to obtain service.

How complex is the integration of cellular core with satellite core?

GateHouse SatCom is building NodeB to be integrated in Satellite networks in three different scenarios:

1) on the ground supporting transparent mode,

2) in the satellite supporting in-orbit processing and regenerative mode, and

3) at the remote side supporting backhauling of 5G services establishing a remote cell.

We are working with suppliers of LTE and 5G Core Network, but unfortunately we have no insight into the complexity of integrating a cellular core into a satellite core.

There are already existing satellite core networks built on 3G and 4G cellular core networks offering mobile data services.

Is 3GPP going to implement and approve DVB-S into 5G NR?

Even as a member of the 3GPP, Gatehouse Satcom does not have full insight into the future evolutions of 5G, but we follow it closely and use our influence accordingly. Changes and additions to the standards are agreed upon between contributing participants. We are also well aware of DVB-S, and in some backhauling solutions DVB-S is used over the satellite link to connect remote NodeB to the Core Network.

What is required of the devices in order to support NB-IoT?

It is expected that standard, off-the-shelf chipsets will be used, along with the standard NB-IoT devices we know from terrestrial networks today. 5G NB-IoT for space needs to be able to run on them, which will be enabled by supporting chipsets.

How can we be sure that our GEO system will be able to run Rel-17 compliant services?

Being able to support 5G NB-IoT services with a GEO system depends on various factors, all going back to your system infrastructure and set-up (e.g. what bands you are operating on, what capacity you have, what user devices are used, which antennas are required, etc.). We can help you obtain a neutral, third-party answer to this, drawing on the 3GPP and NTN expertise we apply when developing the software for those systems. More specifically, we can design an individual pre-assessment or feasibility study based on your needs and system set-up. The goal is to verify the viability of supporting 5G NB-IoT and, for example, to calculate the system capacity and business case.

For this, we bring in our expertise for simulations of the link budget, and the assessment of the system capabilities. Pilot projects (lab tests, proof of concepts, and in orbit demonstrations) are also on our agenda, with the goal to pave your way to a commercial 5G NB-IoT NTN system.

Read more about our feasibility study and our 5G NTN Network Emulator

How will LEO satellites work with Rel-17?

3GPP Release 17 supports Non-Terrestrial connectivity using, for example, satellites in transparent mode. While connected in transparent mode, both service and feeder link must be active simultaneously to obtain service. Signals are mirrored by the satellite between user terminal and ground station. In the case of LEO satellites, connectivity to a ground station must be established before service can be provided to user terminals. Hence, connectivity is provided while the satellite is visible.

What is the difference between gNB and eNB?

eNB and gNB are the terms for base stations in 4G and 5G, respectively. NB-IoT is a 4G-based technology and was developed in 4(½)G – it does however fulfill the requirements for the 5G mMTC (massive machine type communications) scenario, which is why 3GPP decided to use NB-IoT (and eMTC) as technologies for 5G. So NB-IoT is both 4G and 5G, which is why you will often find both eNB and gNB used in the context of NTN IoT. NB-IoT does interoperate with the 4G backhaul (the Evolved Packet Core), so from that perspective eNB should be the correct terminology.

Could delay be an issue in both a GSO and LEO constellation?

For delay-tolerant applications the propagation delay is not an issue, but for near-real-time applications (control, alarms, etc.) it could indeed be: LEO can accommodate exchanges as short as 40-100 ms, whereas GSO exchanges are in the order of seconds. The main difference for delay-tolerant applications with regard to the distance of the satellite would of course be the path loss, and everything that follows in the satellite configuration to achieve an accommodating MCL.

What are the differences between LoRaWAN And NB-IoT?

NB-IoT is a LPWAN often compared to LoRaWAN. In general, LoRaWAN is limited in terms of possible QoS and the number of supported devices in comparison to NB-IoT, but LoRaWAN operates in unlicensed spectrum, which can reduce costs. In an NTN context: (1) the LoRa modulation would suffer at the long distances where only the very high spreading factors would work (without excessive tx power requirements) resulting in a low bit-rate and (2) the large coverage area of NTN cell means that possibly a large number of UEs would reside within the cell and here the ‘Aloha’ access mechanism of LoRaWAN is a severe limiting factor on the scalability – especially in tandem with low throughput (very long temporally) transmissions that are more likely to collide. On the plus-side, the LoRa modulation allows for direct Doppler compensation – no need for a SIB31.
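To illustrate why an Aloha-style access mechanism scales poorly when many UEs share one large cell, the classic pure-ALOHA model can be used. This is a textbook idealization (Poisson arrivals, equal-length frames, no capture effect), not a model of any specific LoRaWAN deployment, and the function names are illustrative.

```python
import math


def pure_aloha_success_probability(offered_load: float) -> float:
    """Probability that a frame overlaps with no other frame.

    offered_load (G) is the average number of frames started per frame duration,
    so longer frames (high spreading factors) raise G for the same message rate.
    """
    return math.exp(-2.0 * offered_load)


def pure_aloha_throughput(offered_load: float) -> float:
    """Successfully delivered frames per frame duration: S = G * exp(-2G)."""
    return offered_load * pure_aloha_success_probability(offered_load)


for g in (0.1, 0.5, 1.0, 2.0):
    print(f"G={g:.1f}: success={pure_aloha_success_probability(g):.3f}, "
          f"throughput={pure_aloha_throughput(g):.3f}")
```

In this model throughput peaks at G = 0.5 and falls off beyond it, which is the scalability limit referred to above.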

Apart from NB-IoT, do you also expect in long-term higher data rates MTC over satellite? E.g. Rel. 17 feature RedCap also over NTN?

NB-IoT is a straightforward solution for NTN because it allows for coverage at a low SNR with a very small amount of signaling overhead, and when NTN is rolled out, NB-IoT and eMTC will have an advantage due to the discontinuous coverage scenario – i.e. they are designed to work in NTN with only a few satellites present. eMTC may be the first step up for MTC over NTN in terms of features and data rates, but RedCap NR devices are also likely contenders for IoT over NTN. However, the NR rollout requires a larger satellite constellation with continuous coverage. On the other hand, NR NTN has a big push in its favor from future cellular handsets integrating NTN capabilities at a low cost – since they are already equipped with GNSS, most of the required changes could be made with a firmware update and an antenna adjustment. So satellite/constellation rollout for NR NTN could end up being very fast.

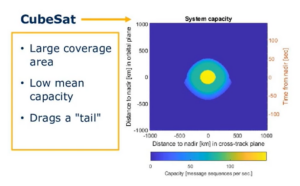

What is causing the trailing of capacity in a LEO cell? And why doesn’t the same thing happen when UE’s are on the leading edge of the moving cell and also consume higher level of channel resources?

As a LEO satellite approaches a UE, the UE must first detect the synchronization signals in the DL and synchronize to the cell before it can initiate a procedural exchange and take up resources within the cell. Since the satellite is moving towards the UE, once the UE is able to synchronize to the cell it will find itself in good link conditions within a few milliseconds, and so UEs that begin a procedural exchange as soon as they are synchronized will mostly be in good conditions for the exchange. As the satellite moves away from the UE, we assume that the UE can maintain synchronicity – perhaps utilizing SIB31 for compensation – but if it initiates a procedural exchange now, then the link conditions deteriorate further during the procedural exchange. This latter case is what creates the trailing of capacity in the LEO case.

How are devices prioritized?

In our framework devices do not have a priority – instead we calculate the required amount of resources for a procedural exchange and then we calculate – given a specific RAN configuration – how many such resource allocations can be fitted per second. We then assume an ‘overhead’ from the scheduler being inefficient.

What are typical turnaround delays in both CubeSat and GSO cases?

Say a procedural exchange involves transmitting 6 one-way messages back and forth in the MO scenario, with 1 additional message (paging) in the MT case. The one-way delay is 2-30 ms in LEO and 120 ms in the GSO case. That gives a total propagation delay of 12-180 ms for LEO and 720 ms for GSO. Additionally, the time required for the transmissions – the time on air (ToA) – will depend on the size of the messages and the SNR conditions during the exchange. A quick estimate would be 6 ms ToA in a perfect scenario and 6 msgs x 4 RUs x 16 reps x 32 ms RU duration (3.75 kHz) = 12288 ms in a worst-case scenario. An additional overhead would stem from the transmission of the random access preamble (RAP) and any paging.
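The estimate above can be reproduced with a few multiplications. The sketch below uses only the figures from this answer; the function names are illustrative, and the random access preamble and paging overhead are deliberately left out.

```python
def total_propagation_ms(n_messages: int, one_way_delay_ms: float) -> float:
    """Propagation delay summed over all one-way messages in the exchange."""
    return n_messages * one_way_delay_ms


def worst_case_time_on_air_ms(n_messages: int, rus_per_message: int,
                              repetitions: int, ru_duration_ms: float) -> float:
    """Time on air when every message needs several RUs and many repetitions."""
    return n_messages * rus_per_message * repetitions * ru_duration_ms


# 6 one-way messages (MO case); one-way delays of 2-30 ms (LEO) and 120 ms (GSO).
leo_propagation = (total_propagation_ms(6, 2), total_propagation_ms(6, 30))
gso_propagation = total_propagation_ms(6, 120)

# Worst case: 4 RUs per message, 16 repetitions, 32 ms per RU (3.75 kHz).
worst_toa = worst_case_time_on_air_ms(6, 4, 16, 32)

print(f"LEO propagation: {leo_propagation[0]}-{leo_propagation[1]} ms")
print(f"GSO propagation: {gso_propagation} ms")
print(f"Worst-case time on air: {worst_toa} ms")
```

In the worst case the time on air dominates the propagation delay by more than an order of magnitude, even for GSO.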

How quickly does a user switch between one satellite and the next?

The switch between one satellite and another could be as fast as in terrestrial networks. UEs may trigger radio link failure (RLF) and re-select a cell. Should the UE “see” another cell with better link conditions and report it to the eNB, the eNB may initiate a handover as in conventional cellular networks, taking, for example, hundreds of milliseconds in LEO. Of course this could be problematic if Extended Coverage (EC) UEs are allowed in the cell and a UE attempts a handover at something like 64 repetitions, which could take many seconds to complete in a narrow communication/visibility window.

What is a typical latency for a single LEO satellite (i.e. not a large constellation)?

The latency in LEO can range from 2 to 20 ms depending on the orbital height and the elevation angle. The revisit time in LEO again depends on the orbit height and inclination along with the RAN coverage area. This can vary from ~90 min to 12 hrs depending on the parameters, with a communication window (visibility window) of 20-200 seconds.
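The dependence of the one-way propagation delay on orbital height and elevation angle can be sketched with simple geometry. The example assumes a spherical Earth and straight-line propagation; the 600 km altitude and the chosen elevation angles are illustrative values, not figures for a specific system.

```python
import math

EARTH_RADIUS_KM = 6371.0           # mean Earth radius (spherical-Earth assumption)
SPEED_OF_LIGHT_KM_S = 299_792.458


def slant_range_km(altitude_km: float, elevation_deg: float) -> float:
    """Distance from a ground UE to the satellite at a given elevation angle."""
    r = EARTH_RADIUS_KM
    sin_e = math.sin(math.radians(elevation_deg))
    return math.sqrt((r * sin_e) ** 2 + 2 * r * altitude_km + altitude_km ** 2) - r * sin_e


def one_way_delay_ms(altitude_km: float, elevation_deg: float) -> float:
    """One-way propagation delay over the slant range, in milliseconds."""
    return slant_range_km(altitude_km, elevation_deg) / SPEED_OF_LIGHT_KM_S * 1000.0


for elevation in (90, 45, 10):
    print(f"600 km orbit, {elevation:2d} deg elevation: "
          f"{one_way_delay_ms(600, elevation):.1f} ms one-way")
```

With the satellite directly overhead the slant range equals the altitude; at low elevation angles the path, and therefore the delay, is several times longer.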

Will there be any change in maximum coupling loss as the link budget increases?

Yes, the MCL is defined as a linear function of the link budget. As the distance to the satellite increases so does the satellite lifetime and the cost to launch the satellite into orbit. Thus, it is natural to assume that satellites launched to greater distances are equipped more expensively with a larger power budget for the RAN and antenna dishes which are more directive and have larger aperture sizes, which limits noise.

Are you assuming the GSO system having several simultaneous gNB cells (one in each beam?)

This is not an assumption in our framework for analysis, but in general our understanding is that a GSO would want to provide individual cellular service within each beam to increase spectral and power efficiency. In the bent-pipe architecture this requires a wide feeder link to be sliced and shifted in frequency for each beam, or some other efficient encapsulation of the RAN in the feeder link.

What is the main limitation on the transceiver on the satellite in term of power consumption?

The OFDM transmission scheme has a low power-amplifier efficiency, so a satellite, which is already limited in its real estate for solar panels and in its power budget, will have to burn a good amount of energy in the amplification process. This is less of a problem in GEO satellites where a higher power budget can be attained, and it is a larger issue with, for example, a CubeSat. However, CubeSats experience less path loss due to the closer orbits in comparison to satellites in GSO – on the other hand the lifetime in LEO is only a few years. A CubeSat can provide an NB-IoT RAN at 4 W output power, though with limited capacity and coverage; it depends entirely on the satellite configuration and service scenario.

What are the challenges of deploying a GEO constellation compared to a LEO constellation?

In terms of constellations, only a few GSO satellites are required for global coverage whereas LEO requires a swarm – of course LEO can also provide discontinuous global coverage with just a single satellite in polar orbit. The Doppler and delay variations are a challenge in LEO at the link-level and at the system-level, there is the challenge of tracking UEs in tracking-areas (TAs), which in conventional cellular are coupled to specific base stations. In GSO, the delay is a challenge along with the path-loss due to the distance to earth.

What would the capacity for my satellite system be?

Capacity for a system is given by the capacity of the individual stages in our framework: Paging, Random access and procedural signalling+data exchanges. The capacity of a given satellite configuration, accompanying RAN configuration and fading environment is exactly what GateHouse can approximate well with our feasibility study.

What is the typical timeline for conducting a capacity modelling?

A capacity modelling can take a few weeks to a few months depending on the scope in terms of scenarios to investigate and features that are required to be modelled.

How precise is the capacity modelling compared to simulations?

The precision of both analytical modelling and simulation depends on the “models” used for either. A Monte Carlo simulation of the NTN protocol and environment is probably the next best candidate for realism after experimental validation, if the simulator takes all the elements of the protocol into account. However, such a simulator takes a long time to develop, and the simulations are computationally heavy and take a long time to run. On the other hand, an analytical framework and models, like our feasibility study, take less development time and run very quickly. Currently, to our knowledge, there is no NTN NB-IoT simulator that can give the same KPIs as our analysis for a comparison, and we view our results as good indications or approximations of the performance.

What sort of connection intervals can be supported?

Inter-arrival time can be traded off against the number of supported UEs. In our framework we calculate the capacity in terms of procedural exchanges that can be supported per second for a given type of traffic. So if 50 exchanges of a DoNAS transmission can be supported per second at the system level, that could either be 300 UEs completing a DoNAS exchange every 6th second or 90,000 UEs completing a DoNAS exchange every 30 minutes.
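The trade-off is a single multiplication: the number of UEs that can be served is the supported exchange rate times the interval between each UE's exchanges. A minimal sketch with illustrative names, assuming exchanges are spread evenly over time:

```python
def supported_ues(exchanges_per_s: float, inter_arrival_s: float) -> float:
    """Number of UEs that fit if each UE completes one exchange per interval."""
    return exchanges_per_s * inter_arrival_s


CAPACITY = 50  # DoNAS exchanges per second at system level (figure from the text)

print(f"{supported_ues(CAPACITY, 6):.0f} UEs at one exchange every 6 s")
print(f"{supported_ues(CAPACITY, 60):.0f} UEs at one exchange every minute")
```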

What is the capacity of a multicast system?

The capacity of a NB-IoT cell relying on multicast depends on: (1) The MCS of the broadcasting channel, which gives a bit-rate/transmission time for broadcasts and a SNR requirement for allowing decoding of the broadcast, (2) The satellite configuration and resulting link-budget, which fixes the coverage area where the required SNR for the broadcast can be achieved and in turn the number of UEs that can receive the broadcast.

Are you expecting to support multicast by satellite for 5G IoT devices?

Yes as a future feature. The roadmap for our 5G NB-IoT waveform is prioritised according to our customer needs.

Can devices submerged in the sea connect to a satellite or would the device need to be in line of sight?

The first issue with being submerged in water is the additional propagation loss in the water: water, being conductive, severely attenuates radio frequencies. So it would be key to stay at a low depth. A better link budget, i.e. directional antennas and higher transmission powers, can help overcome this issue to some extent.

The second issue is refraction between the air-water mediums. Refraction happens when a wave travels through a medium of one density into another medium of a different density. This causes the path of the wave to bend and also slows down the speed of the wave which in turn decreases the wavelength, which an underwater receiver must also account for.

In short, the underwater scenario is very challenging and it would be advantageous to communicate at the surface.

How many satellites are needed to have 1 min of tracking?

Depending on the orbit, configuration and antenna system this can be quite different. However 1-2 min of tracking with one CubeSat in LEO at 600km height is possible.

What is the cost of the ‘Earth Moving cell paradigm’ against the earth fixed cell one?

In NGSO, Earth fixed cells will require more advanced antennas leading to higher costs. On the other hand it is easier to manage system wise. The industry is divided on which is better and it is difficult to judge at this time which will be least expensive overall. In GEO it is trivial to have earth-fixed cells in a scenario that is very similar to terrestrial cellular with the exception of additional propagation delay and loss.

Can an old module NB2 be patched to support satellite communication?

Hardware should be re-usable, i.e. a software-only patch could be feasible. Some algorithms like uplink transmission segmentation and time-frequency compensation may be too computationally heavy for some chips to do in SW, but will surely be included in HW in the future. The hardware platform must also include a GNSS receiver to support the compensation algorithms.

How does the 5G NB-IoT NTN standard handle the case that the device is rapidly moving and need to be connected to a NGSO network?

This depends on the situation. First, feeder link switch-overs need to be in place to secure a connection to ground from any satellite. Secondly, the device may have to do cell reselection if moving into the coverage of another cell. If moving out of the tracking area, it will have to perform the tracking area update (TAU) procedure. If a connection is established through one satellite, this connection can be kept even if the device is moving between tracking areas. It may involve a tracking area update of the device.

Does 5G NB-IoT NTN standard require synchronous connectivity between the device and the NGSO network?

Yes, several procedures require synchronous connectivity between the device and the network core. There is a study item on the agenda for R19 on store-and-forward functionality. This is to mitigate the issue of discontinuous feeder links, i.e. ground connectivity, for delay-tolerant applications.

Is it really possible to provide service to ordinary 5G devices from space?

The link-budgets and experiments carried out so far show that cellular NTN is feasible with regular handheld devices. One factor to consider is that although the propagation distance is greatly increased, the satellite base-station can utilize directional antennas to achieve large gains.
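To put the increased propagation distance into numbers, the free-space path loss can be computed for a LEO and a GEO distance. The 2 GHz carrier and the two distances below are assumptions chosen for illustration, and the sketch ignores atmospheric, fading and antenna effects.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def free_space_path_loss_db(distance_m: float, frequency_hz: float) -> float:
    """Free-space path loss: 20 * log10(4 * pi * d * f / c)."""
    return 20.0 * math.log10(4.0 * math.pi * distance_m * frequency_hz / SPEED_OF_LIGHT_M_S)


CARRIER_HZ = 2.0e9  # assumed S-band carrier, for illustration only

leo_loss = free_space_path_loss_db(600e3, CARRIER_HZ)      # satellite overhead at 600 km
geo_loss = free_space_path_loss_db(35_786e3, CARRIER_HZ)   # GEO altitude

print(f"LEO (600 km):    {leo_loss:.1f} dB")
print(f"GEO (35,786 km): {geo_loss:.1f} dB")
print(f"Extra loss to be recovered for GEO: {geo_loss - leo_loss:.1f} dB")
```

The difference between the two is what antenna gain, transmit power and repetitions have to make up in the link budget.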

How is the compensation done on the UE based on GNSS and which information is broadcast?

The UE needs to measure its position and velocity by GNSS in Rel17. There will be frequent broadcasts from the NodeB containing the orbit of the satellite enabling the UE to calculate path loss, timing advance and Doppler. The device will then compensate its uplink signal which means the NodeB will only experience the additional delay compared to terrestrial systems.

Do you provide connectivity services to private users?

No. We are a software development company, developing software protocols for businesses (e.g. satellite operators), who use said software to offer connectivity services.

Do you provide 24/7 support?

Yes. Standard GateHouse Support and Maintenance Services are carried out during normal working hours. However, our customers have the possibility to procure a 24/7/365 support and maintenance service as an add-on to the basic service.

Would Gatehouse Satcom engage in exclusivity agreements?

In principle no, as we deliver products horizontally to the market. GateHouse acknowledges that some customers are developing specialized solutions and may, on an exceptional basis, agree to a limited exclusivity for a specified market or technology.

Who are Gatehouse Satcom’s typical clients?

For our 5G NTN services, our main clients are satellite operators, who wish to bring 5G NTN connectivity services into their portfolio. Besides software development based on 3GPP standards, we are always eager to develop proprietary space software as well (e.g., for defense projects). We also assist ground infrastructure providers to understand how they can support 5G from space.

For our BGAN products, we typically do business with terminal manufacturers and anyone who wants to perform verification and validation tests - this could be a UAV-manufacturer who is using Inmarsat/Viasat for satellite connectivity.

What do you do at Gatehouse Satcom?

Gatehouse Satcom provides market-leading communications software to the global satcom industry.

During the last 25 years, we have developed more than 15 different software solutions enabling connectivity from space. Currently, we are developing the bi-directional 5G NB-IoT NTN software (the NodeB), which will be compliant with the 3GPP standard. It can be used in satellite systems for GSO / MEO / LEO satellites, user terminals, and ground infrastructure, supporting up- and downlink. In 2023, we started development on the PHY layer for a 5G NR (New Radio) NTN NodeB.

With our "Prove & Deploy" approach we serve clients with studies, demonstrations and software for deployment.

Are the Gatehouse Satcom test tools for off-air or on-air testing?

Yes, we offer test tools for both off-air testing and on-air testing. Our BGAN test tools, BGAN Application Tester and BGAN Network Emulator, are off-air testing, while our 5G NB-IoT test tool, 5G NTN Network Emulator, can be applied both off-air and on-air.

Which DAMA/IW products does Gatehouse Satcom offer?

Demand Assigned Multiple Access (DAMA) and Integrated Waveform (IW) are protocols used in satellite communications, predominantly within the defense and military sector.

Gatehouse Satcom offers a DAMA/IW Network Controller as well as DAMA/IW protocol components.

Which services does DAMA/IW support?

DAMA/IW supports the following services: Speech, Messaging, Data, VoIP, Unicast, Multicast, and Broadcast. Find more information here.

What is BGAN?

Broadband Global Area Network is a Satellite-based communication network with global coverage offered by Inmarsat. It is used by independent service providers to offer a range of voice and broadband services. The service will enable delivery of Internet content, video-on-demand, video conferencing, fax, e-mail, voice, and VPN access at speeds up to 800 kbps accessed via a small, lightweight satellite terminal.

Can ray tracing be done for different areas, mountains, ocean, desert?

Yes, ray tracing can be done for different terrain and geographic locations. We can also create specific scenarios or use 3GPP fading models.

Is there a difference between cell size and beam size?

Yes, a ‘beam’ refers to the RF or ‘physical’ power from the TX side, which is a continuous function. A ‘cell’ is a logical entity on the RX side in a cellular network and is determined as an area within the ‘beam’ where certain criteria are met: synchronization is possible and the SNR is above a threshold.

How is it possible to compensate for the Doppler effect in an NGSO setting? Are UEs supposed to precompensate for the Doppler effect?

Yes, in Rel-17 the UE will handle the compensation. In the downlink, the UE will synchronize to the Doppler-shifted NPSS/NSSS signals as usual. It will then decode an ephemeris (a description of the serving satellite’s orbit that is accurate for a short time, say 1 sec), which allows the UE to precompensate for the Doppler effect when it transmits in the uplink direction (RACH/PUSCH). This will be the approach for NTN IoT (NB-IoT and eMTC) and also NTN NR.

How does the signaling overhead compare for the satellite assistance SIB in LEO vs GEO configurations?

In short, GEO will have little overhead while NGSO, and especially LEO, will see more overhead, but we expect at most a few percent overhead on the anchor channel. Two SIBs are defined for NTN IoT: the first is for uplink synchronization, and the second (to be defined in May) is for helping UEs predict coverage in discontinuous coverage scenarios, to better enable mobile originating (MO) traffic. The first SIB has a fixed size regardless of the use case, but in LEO it may be necessary to transmit it, for example, once per second (this will depend on the orbit, the satellite payload GNSS and the band of interest), whereas in GEO a UE need only receive it once. Overall, this SIB should take up at most a few percent of the anchor channel. The SIB for satellite assistance information (SAI) is not defined yet, but we expect it to be of variable size with plenty of optional parameters. This SIB-SAI is optional and should not be an overhead in GEO; it should be expected as overhead in discontinuous NGSO only. The SIB-SAI need only be received by UEs once, but the overhead will again be larger for LEO, where the satellite moves faster. A rate of once per 5 or 10 sec should be feasible.

What are the typical message lengths (in kilobytes) that can be sent and received via satellite NB-IoT? Does it compare with cellular NB-IoT?

The transport block sizes in NTN NB-IoT are the same as in NB-IoT, so the difference is in the fading model and the link budget. Provided that the link budget of a satellite payload is comparable to that of a terrestrial cell, the typical message lengths will be comparable between TN and NTN. Basically, you should in most cases be able to expect TN-like performance if the satellite payload is well designed.

Are there any satellite crosslink capabilities providing global coverage and connectivity within one satellite footprint?

Yes, inter-satellite links (ISL) can be used for networking and routing between satellites. However, in Rel-17 the focus has been on bent-pipe satellite payloads, i.e. satellites that act as relays where the ground station is the actual base station. A future release first needs to shift the focus to regenerative payloads, i.e. base stations onboard the satellite, and then to ISL later. Nothing hinders ISL at the moment; it is just not standardized.

What are the latency considerations for IoT use cases?

The latency in NTN is larger than in terrestrial networks due to the larger propagation delay. In some satellite constellations, coverage cannot be provided continuously on the ground either. So IoT devices for NTN must be delay tolerant.

Apart from UEs and satlinks, is there any need for ground infrastructure to establish 5G IoT communication?

Yes. The radio access technologies (NB-IoT, LTE, LoRaWAN, etc.) are just the communication link between UEs and satellites. To make this link useful, a link to the core network on earth must be established. This latter link is known as the feeder link in SatCom terminology and is established between the satellite and large ground stations. The feeder link must provide sufficient capacity for the cumulative RAN information (and some additional telemetry) to be exchanged, which is why ground stations typically have large steerable antennas and a large transmission power.

Does 5G NB-IoT work on Ka/Ku band?

Rel-17 will work on the S-band, but preliminary work has already been started on the Ka-band. It is likely that higher bands will be supported in future releases. The higher frequencies are a source of wider spectrum/bandwidth for the NTN networks, but there are major challenges involved with higher frequencies, in particular dealing with the increased propagation loss. It could very well be unfeasible to launch Ka/Ku band on cubesat payloads due to the limited power budget.

How are your simulations handling dynamics of moving satellites?

In the case of a GEO satellite, the cell will have a static link budget and the elevation angle toward the satellite does not vary throughout the cell. In the case of an NGSO earth-fixed cell, the cell has a fixed position, and so would a stationary UE within it, but the link budget and elevation angles are dynamic and change over time, so we compute these for a satellite pass. In the case of an NGSO earth-moving cell, the link budget and elevation angles within the cell are static, but the cell moves over the UE. This is equivalent to a UE travelling within a GEO cell (at approximately 7.3 km/s or so).

How are your systems coping with finding satellites when both devices and sats are moving?

3GPP has defined functionality with reference to the channel raster such that UEs will always be able to look for, find and appropriately identify any available channel. The trick is to find an available cell by searching for that particular channel while in coverage of a serving satellite. This can be helped by satellite assistance information.

Is it possible to emulate 5G NB-IoT network links?

Yes, it would be possible to do real-life testing with our in-orbit emulator. Gatehouse Satcom offers tools for both off-air and in-orbit testing that can create controlled and fully configurable environments to emulate various real-world scenarios.

Have you done any study concerning potential interference between terrestrial component and NTN component within the same network?

We have not studied interference between TN and NTN. The networks should be separated in frequency with appropriate guard bands handling Doppler shift in the NGSO case. The bands and channels allocated for NTN and TN are being determined by standardization organizations like ITU, 3GPP and ETSI. As a general rule you can count on interference not being allowed.

Does the beam center move with the satellite movement in NGSO or does it “track” the location of the NB-IoT devices in FOV?

There are two scenarios defined by 3GPP in the NGSO case: 1) Earth-fixed cells, where an NGSO satellite steers its beams such that the cell projected on the ground does not move and 2) Earth-moving cells, where an NGSO satellite has a fixed beam direction, such that the cell moves around with the satellite.

What bandwidth can be reached (in bits per second)?

The peak throughput is a bit less than for terrestrial NB-IoT, around 127 kbits/s in PDSCH (DL) and the same in PUSCH (UL) at the link level, without accounting for propagation time. In reality, the obtainable throughput will depend heavily on the link budget throughout the cell, which is a function of the satellite payload. In our feasibility study we can take this evaluation one step further to account for overhead in terms of static signaling and the dynamic message exchanges (an application payload is embedded in a larger message exchange, e.g. RA+).

On what frequency band are your simulations derived?

The carrier frequency (band of operation) is a parameter for the configuration of the feasibility study. In general the frequency will change the link budget and the Doppler characteristics.

Are you recruiting people from telecom domain or only from satcom?

Yes, we recruit from telecom as well. We currently have several open job listings on our website. The engineering and management teams at Gatehouse are very diverse: they have extensive experience in the domains of telecom and satcom, and they are also industry leaders in specific technical areas, such as eNodeB, gNodeB, waveforms and system architecture within the non-terrestrial network technology area. The majority of the team holds a Master's degree, while other colleagues have a PhD background specialized in telecom or satcom.

How do you simulate real-life-scenarios in your feasibility study?

In essence, the realism of the feasibility study depends on the configuration of the scenario (input parameters). The results are generally approximations or worst/best-case results, and where applicable they have been compared to similar SoTA results. All modelling is an attempt to deconstruct or approximate reality in a way that we can more easily deal with. In our feasibility study, we have divided the RAN (radio access network) into three major parts: the fading channel, the link level and the system level. We can develop fading channels based on ray tracing, which will be very realistic, use a more abstract/generalized model, or use 3GPP standardized models, depending on choice. On the link level, we do extensive Monte Carlo simulations to find the link performance given the chosen fading model. On the system level, we have rigorous analytical models which account for many protocol aspects and signaling overheads (e.g. the various message sequences), and this level relies on the realism of the two layers below.

Read more about our 5G NTN Feasibility Study

Can you explain the difference between 5G NTN and 6G?

6G will be an evolution of 5G. 6G has not been defined and is expected to incorporate even more of the NTN capabilities. The first 3GPP release that will incorporate 6G is expected with release 21 in 2029.

How does the handover procedure work for NGSO satellites? Can it be done by having the 2nd link up before the 1st one is lost?

Handover of traffic connections resulting from moving NGSO satellites is not supported in release 17 and in transparent mode there is no on-board processing. The procedure and algorithms for handover currently implemented in standard compliant user terminals will not be able to support setting up a 2nd link for handover of the traffic. We expect this to come as part of one of the following releases.

Will there be issues with the use of transparent mode in NGSO systems?

We see two issues with the use of transparent mode in NGSO systems.

1) As the satellites are only visible from both ground station and user terminals for a relatively short time period, it is only possible to obtain service in short time intervals.

2) Since ground stations must be located in the same satellite footprint as the user terminals, there will be large parts of the earth's surface, like the oceans, where it is not possible to obtain services.

Will it be possible to use the satellites for data transmission in connected mode or is connectivity restricted to disconnected mode via the random access procedure?

There are no changes to the protocol or services available under 3GPP release 17 for non-terrestrial networks. A PDP Context can be established and maintained for data transmission as for terrestrial networks. Hence, there is no need to apply the random access procedure as long as the connection is not broken. For NGSO communication, the transmission will likely last a few minutes, while for GEO transmission the context can be kept active longer.

What are the solutions to the synchronization in the presence of Doppler spread considering the relatively short satellite visibility time?

With the help of the NPSS, NSSS and NRS, a signal can be detected, and the frequency offset can be determined while decoding it. This frequency offset can be very high and becomes lower the closer the satellite comes to the UE; the processing algorithm can calculate and resolve this.

How are power consumption and line of sight going to affect hybrid connectivity?

The 3GPP standards specify multimode user terminals that are capable of obtaining service without modifications on both terrestrial and non-terrestrial networks. Tests have been conducted with hardware conforming to earlier releases where only software was modified to obtain service. Hence, power consumption is expected to be equal to previous releases for user terminals working in hybrid mode. To close the link budget user terminals are expected to have line of sight visibility to satellites when used on satellite based networks.

How will the regenerative mode help out on LEO networks?

Compared to the transparent mode (Rel-17), the regenerative mode will include enhancements and optimizations for NGSO satellite systems, taking into account the movement of the non-geostationary satellites, enabling efficient blind search of user devices, etc. The regenerative mode will enable UEs to communicate with the NodeB even when a feeder link is not active, and makes communication everywhere on the globe possible. In the regenerative mode, the NodeB will be located on the satellites themselves.

Which chipsets are expected for TN/NTN dualmode 5G NB-IoT operations?

To support NTN 5G NB-IoT connectivity, common chipsets that can support multiple access technologies, as well as control carriers on multiple frequencies, are expected to be used.

For LEO satellites, the chipsets will need to be able to control the timing and frequency drifting, caused by the varying time delay and Doppler due to the satellite’s motion.

What antenna is required for an NTN 5G NB-IoT device?

It is desirable and possible to use the same kind of omnidirectional antennas which are used in terrestrial IoT devices. To compensate for the low device antenna gain, the satellite shall be equipped with a directional antenna with a higher gain. It will still be beneficial and possible for some devices to use a higher gain antenna to obtain a better link-budget.

Will there be spectrum/frequency available in my country?

5G NB-IoT connectivity for NTN and TN networks is expected to be a global standard. Please approach your local ITU, to obtain and apply for spectrum allocation.

How will the network be managed between satellite operators on the non-terrestrial network side (NTN) and the Mobile Network Operators (MNOs) on the terrestrial network (TN) side?

The expected end-vision of the 5G standardization foresees connectivity provision handled by MNOs. This would mean that IoT customers who need connectivity for their IoT terminals, would approach their local MNO, who offers connectivity for dual mode networks (meaning terrestrial and non-terrestrial connectivity – TN and NTN). In this case, the satellite operator would have an agreement with the MNO. Until the standardization has evolved to this point, we expect satellite operators to offer 5G network directly to their customers, with the MNO brought in case by case.

Will there be a seamless satellite handover in a LEO or GSO scenario?

Devices can stay connected to the same GSO satellite, since the satellite is stationary. LEO satellites are moving in relation to earth, and devices will need to continue reselecting different satellites. Otherwise, connection gaps will be experienced. As NB-IoT currently does not support Handover procedures, a message transfer will need to finish during the pass of a single satellite.

How is Doppler effect handled with NB-IoT and non stationary satellites (NGSO)?

Since NGSO satellites (e.g. LEO or MEO satellites) move around the earth at very high speeds (up to 28,000 km/hour), transmitted signals are affected by the Doppler effect. Algorithms reconstruct the signal by taking into account the (moving) positions of the satellite and the device. For this, GNSS position information of the satellite is transmitted within System Information Broadcast messages. The device’s location can either be configured as fixed or retrieved via an embedded GNSS module. In this way, the original signal can be recovered and the uplink transmission can be pre-compensated at the device side.
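
As a rough illustration of the scale involved, the sketch below applies the first-order Doppler formula (shift = radial velocity / speed of light × carrier frequency). It treats the top speed quoted above (28,000 km/h) as if it lay entirely along the line of sight, which is an upper bound rather than a realistic pass, and it assumes a 2 GHz S-band carrier. The function names are hypothetical and not part of any Gatehouse Satcom product or 3GPP API.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def doppler_shift_hz(radial_velocity_m_s: float, carrier_hz: float) -> float:
    """First-order Doppler shift seen by the receiver.

    Positive radial velocity means satellite and device are approaching.
    """
    return radial_velocity_m_s / SPEED_OF_LIGHT_M_S * carrier_hz


def precompensated_uplink_hz(nominal_hz: float, radial_velocity_m_s: float) -> float:
    """Frequency the device transmits on so the satellite receives roughly nominal_hz."""
    return nominal_hz - doppler_shift_hz(radial_velocity_m_s, nominal_hz)


# Assumed worst case: the full 28,000 km/h lies along the line of sight.
worst_case_velocity_m_s = 28_000 / 3.6
# Assumed S-band carrier of 2 GHz (illustrative only).
shift_hz = doppler_shift_hz(worst_case_velocity_m_s, 2.0e9)
print(f"Upper bound on Doppler shift: {shift_hz / 1e3:.1f} kHz")
```

Under these assumptions the bound comes out at roughly 52 kHz. In a real pass the radial velocity is smaller and changes sign as the satellite passes overhead, which is why the device needs up-to-date satellite position information.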

What latency level is anticipated with GSO and LEO satellites?

With GSO satellites positioned stationary at 36,000 km from Earth, propagation delays of up to 541 ms will occur. By comparison, for a LEO satellite at a distance of 600 km, the delay will vary between 4 and 26 ms for regenerative systems, depending on the position of the satellite in relation to the device. For transparent systems, as focused on in 3GPP Rel-17, the LEO propagation delay is doubled (8-52 ms).
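
The geometry behind such figures can be sketched as follows. This is a minimal example, assuming a spherical Earth of radius 6371 km and a straight-line path at the speed of light; the function names and the 10 degree minimum elevation are illustrative assumptions, not values taken from our tools. How many legs make up a full round trip depends on the architecture, so the sketch only returns the one-way delay for a single leg.

```python
import math

EARTH_RADIUS_KM = 6371.0   # assumed mean Earth radius (spherical model)
SPEED_OF_LIGHT_KM_S = 299_792.458


def slant_range_km(altitude_km: float, elevation_deg: float) -> float:
    """Distance from a ground device to a satellite seen at the given elevation."""
    r_sin = EARTH_RADIUS_KM * math.sin(math.radians(elevation_deg))
    return math.sqrt(
        r_sin ** 2 + altitude_km ** 2 + 2 * altitude_km * EARTH_RADIUS_KM
    ) - r_sin


def one_way_delay_ms(altitude_km: float, elevation_deg: float) -> float:
    """Propagation delay for a single leg between device and satellite."""
    return slant_range_km(altitude_km, elevation_deg) / SPEED_OF_LIGHT_KM_S * 1000.0


# Satellite directly overhead vs. low on the horizon (10 degrees assumed).
for name, altitude in (("LEO 600 km", 600.0), ("GEO 35,786 km", 35_786.0)):
    best = one_way_delay_ms(altitude, 90.0)
    worst = one_way_delay_ms(altitude, 10.0)
    print(f"{name}: {best:.1f} ms to {worst:.1f} ms per leg")
```

With these assumptions, four GEO legs at 10 degrees elevation (device to satellite to ground station and back) add up to a value close to the 541 ms quoted above.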

What link budget is anticipated for NTN NB-IoT?

There will often be direct line-of-sight between satellite and device, but the Free-Space-Path-Loss for NTN NB-IoT is higher due to the longer distance. The link budget is calculated separately for up- and downlink. Uplink is favored by the use of single-tone transmission, which theoretically adds up to 17 dB gain. Antennas on GSO satellites typically have a large gain (around 50 dBi), while it is less for LEO satellites. This results in LEO and GSO link budgets with comparable dB ranges. Calculation on a small-sat LEO case indicates that the SNR range for downlink is -5 to 0 dB, while for uplink it is -2 to 3 dB (depending on elevation angle and distance between the device and satellite).
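
Two of the terms above can be illustrated with standard formulas. The sketch below is not our link-budget model: it uses the textbook free-space path loss expression and assumes that the single-tone gain comes from concentrating the transmit power into one 3.75 kHz tone instead of the full 180 kHz NB-IoT carrier. The 2 GHz carrier, the distances and the function names are assumptions chosen for illustration.

```python
import math


def fspl_db(distance_km: float, freq_mhz: float) -> float:
    """Free-space path loss in dB for a distance in km and a frequency in MHz."""
    return 20 * math.log10(distance_km) + 20 * math.log10(freq_mhz) + 32.45


def power_concentration_gain_db(wide_hz: float, narrow_hz: float) -> float:
    """Gain in power spectral density when the same power fills a narrower band."""
    return 10 * math.log10(wide_hz / narrow_hz)


# Assumed 2 GHz carrier; satellite directly overhead in both cases.
leo_loss = fspl_db(600.0, 2000.0)
geo_loss = fspl_db(35_786.0, 2000.0)
print(f"LEO path loss: {leo_loss:.1f} dB, GEO path loss: {geo_loss:.1f} dB")

# Assumed interpretation of the single-tone gain: 180 kHz carrier vs. one 3.75 kHz tone.
tone_gain = power_concentration_gain_db(180e3, 3.75e3)
print(f"Single-tone concentration gain: {tone_gain:.1f} dB")
```

The gap of roughly 35 dB between the two path losses is the kind of difference a larger GSO antenna gain and power budget has to make up for the link budgets to end up in comparable ranges.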

In what frequencies does NTN 5G NB-IoT operate?

We are developing our 5G NTN software with L- and S-band reference in accordance with the 3GPP standards in Rel-17. We will be happy to support you with understanding and assessing the exact requirements and potential use cases.

Is 5G NB-IoT more suitable for NGSOs than GSOs?

The 5G NTN standard is working with two different satellite configurations – the 1) Transparent mode, and 2) Regenerative mode.

The 3GPP 5G standardization group has started with the specification of the transparent mode in Rel-17, while the regenerative mode is planned for future releases. The transparent mode fits both GEO and NGSO satellites.

The standard also looks into the supported frequencies. E.g. the higher the frequency, the more challenges are expected for the performance, as the frequency influences the antenna size. If you want to assess how your satellite set-up is suitable for supporting 5G NB-IoT, please contact us.

How does the 5G NB-IoT software protocol differ for NGSO and GSO satellites?

The major differences are in the satellites' infrastructure. One example is the different location of the NodeB functionality.

For help in assessing how your satellite system set-up can support 5G NB-IoT connectivity, please do contact us.

Can your simulator be used by cellular operators to find out which satellite gives good coverage and capacity in a specific location?

The feasibility study allows for ascertaining system-level KPIs: system capacity, UE QoS (throughput, latency) and UE energy consumption. This is done on the basis of the scenario definition, so it is indeed possible to define a specific geographic area, say the Himalayas, and ascertain the performance of a cell or a UE in that location.

Which use cases for 5G NB-IoT would work best for GEO vs non-GEO sats?

The use case would be delay-tolerant applications for both LEO and GEO. GEO has the advantage of providing terrestrial-like cells, while LEO has the advantage of providing global (discontinuous) coverage and a lower propagation delay. It is cheaper to launch satellites into LEO than GEO, so a GEO payload can typically be more expensive and justify an increased power budget compared to LEO satellite payloads. The new space race with cubesats in particular allows low-cost LEO payloads to be launched.

When do you recommend starting to look into 5G NTN?

We recommend using the time until devices are commercially available to understand your system set-up and requirements, and to test and prove the concept within your infrastructure, so that you are ready when Rel-17 can be implemented into commercial systems.

Which projects and studies have you done for satellite 5G NB-IoT?

Gatehouse Satcom has successfully completed an ESA project on 5G NB-IoT for SmallSat networks and we are constantly working with customer projects to help our customers understand capabilities and opportunities applying 5G NTN services. We have built a 5G NTN simulation model which is applied in contributions to 3GPP standardization and in various customer projects. And finally we have built and tested a 5G NTN NB-IoT NodeB being used for various lab and in-orbit testing.

How does Gatehouse Satcom’s software differ from what other players are doing within 5G NB-IoT?

We have a long history of developing protocols for the Satcom industry, and we have vast experience with the complexities that communication via satellite involves.

We are part of the 3GPP standardization group developing the 5G standards and we bring our understanding of satellite connectivity to the 3GPP work.

Furthermore, we offer assessments of future 5G satellite systems for Satellite Service providers to help push 5G technology to the market.

Our experience, expertise and understanding of the market makes our products more mature and agile giving you a superior end-product.

When is the 5G NB-IoT waveform (NodeB) ready?

We are developing the 5G NB-IoT software as part of the official 3GPP standardization group. This means that the NodeB will be commercially available adhering to the standardization's timeline. This is the beginning of 2024 (for Rel. 18, regenerative mode). Rel. 17 (transparent mode) will already be commercially available by mid 2022.

To be ready when 5G NB-IoT kicks off officially, we recommend reviewing your system set-up and satellite fleet already today. We will be happy to guide you through that.

How can I as a ground station infrastructure provider support 5G NB-IoT services for satellites?

Your ground station infrastructure needs edge computing capabilities to support 5G. For more details, please reach out to us.

How can Gatehouse Satcom help satellite operators realize their 5G strategy?

We can help satellite operators verify and validate future 5G NTN service performance of their satellite networks based on a technical feasibility study. This includes system capacity calculations, performance trade-offs and suggestions on how to maximize performance under the chosen link/fading conditions and network configuration.

We offer tools for off-air and in-orbit testing that satellite operators can apply to create controlled and fully configurable environments to emulate various real-world scenarios. By demonstrating 5G NTN connectivity, satellite operators gain valuable insights into system behavior and the final business case before investing in a full commercial system and actual launch of the planned 5G NTN service.

Read more about our technical feasibility study and our 5G Network Emulator.

Should I wait to look into 5G NB-IoT until the standard is commercially available?

It all depends on your business strategy. If the IoT market, especially with devices that only send small amounts of data (Narrowband-IoT), could be an interesting asset to your service portfolio, we recommend looking into 5G NB-IoT already today.

The reason for this is that it takes some time until you have analyzed your system set-up – to understand any requirements or changes (e.g. for designing a new satellite fleet, or how to adjust your current ones) that you need to realize your 5G strategy.

We recommend starting with this today, to make sure you are ready, once the 5G market takes off. We will be happy to support you with your system assessment.

When will the 5G NB-IoT standard be available?

According to 3GPP's timeline, the complete 5G NB-IoT standard will be commercially available by the beginning of 2024 (for Rel. 18, regenerative mode). The 5G NB-IoT standard for transparent mode was made commercially available in Release 17.

If you want to know more about Release 17, watch our webinar where we discuss the features and supported use cases of 3GPP's Release 17.

What does the expected market for 5G NB-IoT look like – what are the use cases?

The 5G NB-IoT NTN market is expected to be around 1.9 billion devices addressable for direct-to-device satellite connectivity by 2035 (GSMA Intelligence).

The NTN services are expected in all verticals, including IoT devices sending small amounts of data and needing connectivity even in the remotest areas.

The need for 5G NB-IoT NTN can range from public services verticals (e.g. rescue), to logistics, agriculture, oil and gas or mining.

Concrete use cases could be for example (1) emergency cars entering remote areas while being able to communicate with the hospital, (2) connected vehicles in the logistics and transportation industry providing information about the arrival of a shipment, (3) IoT sensors used on farms to measure for instance fertilizer levels for optimization of supply chains, or (4) IoT sensors monitoring oil pipelines to avoid downtime and improve safety.

Have a question?

Do you have a satellite communications related question you did not find an answer to in our FAQ?

Please send your question to us and we will get back to you shortly.